PROJECT: Ambulant Sounds / Sounds in Space [2015-19]

Sounds in Space was a 3.5-year project exploring the nature of sound, location and orientation in space. Essentially, it asked what happens when we allow sound to exist relative to you and to have a tangible presence.

The Sounds in Space technology allows you to place virtual sounds in a 3D space using a mobile phone. Once the sounds are placed, audience members can walk around the space wearing headphones to hear the sounds, all of which are binaural and respond to the person’s movement within the space.
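As a rough illustration of what "sound that exists relative to you" involves, here is a minimal Python sketch. It is not the project's code: the `Listener` and `PlacedSound` types, the `relative_to_listener` function and the simple inverse-distance gain are all assumptions made for this example. It only shows the geometry a binaural renderer would need each time the listener moves or turns: how far away a placed sound is, and at what angle it sits relative to the head.

```python
import math
from dataclasses import dataclass


@dataclass
class Listener:
    """Hypothetical listener pose on the floor plane (metres, degrees)."""
    x: float
    z: float
    yaw_deg: float  # 0 = facing +z, positive = turned to the right


@dataclass
class PlacedSound:
    """Hypothetical virtual sound anchored at a fixed point in the room."""
    name: str
    x: float
    z: float


def relative_to_listener(listener, sound, ref_distance=1.0):
    """Return (distance_m, azimuth_deg, gain) of a sound as heard by the listener.

    azimuth_deg is wrapped to [-180, 180): 0 is straight ahead,
    positive is to the listener's right, negative to the left.
    gain uses a simple inverse-distance rolloff (an assumption of this
    sketch), clamped so sounds closer than ref_distance are not boosted.
    """
    dx = sound.x - listener.x
    dz = sound.z - listener.z
    distance = math.hypot(dx, dz)
    # World bearing of the sound, measured from +z towards +x.
    bearing = math.degrees(math.atan2(dx, dz))
    # Subtract the head orientation, then wrap into [-180, 180).
    azimuth = (bearing - listener.yaw_deg + 180.0) % 360.0 - 180.0
    gain = ref_distance / max(distance, ref_distance)
    return distance, azimuth, gain


if __name__ == "__main__":
    bell = PlacedSound("bell", x=0.0, z=2.0)
    # Walk nowhere, just turn on the spot: the sound stays put in the room,
    # so its angle relative to the head changes.
    for yaw in (0.0, 45.0, 90.0):
        d, az, g = relative_to_listener(Listener(0.0, 0.0, yaw), bell)
        print(f"yaw={yaw:5.1f}  distance={d:.2f} m  azimuth={az:6.1f}  gain={g:.2f}")
```

A real system would feed the distance and azimuth into a binaural (HRTF-based) renderer and take the listener pose from the phone's tracking; both are outside the scope of this sketch.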

We open-sourced all the code so that it can be used for your own experiments. It is (surprisingly) well documented at the GCL GitHub: https://github.com/googlecreativelab/sounds-in-space

On Sound.

- excerpted from Debbie’s Design Matters podcast.

Tea: So we're in your studio, but we're wearing headphones, so the sound gets very distorted. It's not natural sound, and it feels unnatural to be wearing headphones in this small little room. And you can move between those sounds and that can allow you to understand the sound, understand the context of the space you're in differently. It can affect how you experience the information.

Tea: Just the idea of moving the sounds so that we could make it sound like we're in a vast concert hall. And then you begin to understand how if you can do that with objects in space, all of this stuff with AR and VR, we're always listening. We might be able to see it on a screen in the thing, but unless you can hear it in exactly the right place, and we can't hear it in exactly the right place because the sound isn't coming from that place.

Tea: It's being generated artificially, and it can't be artificially generated specifically for you, because your ears are a funny shape. So, because sound is subjective to you, that thing of putting a sound in space precisely so that it works, becomes an incredibly challenging exercise. And it's one that I'm enjoying enormously. I think we'll get our ears scanned.

Tea: In a few years time, I think you'll get your ears scanned just like you get your eyes tested, and that you'll have headphones which literally adapt to the inner structure of your ear, and allow you mainly entirely for the purpose of augmented reality, that sounds can begin to come from space, from very precise spaces. And they're going to need to know the shape of your ear in order for it to come from that precise space.

Debbie: So, customized listening.

Tea: Yeah, customized listening. Because then information doesn't need to come into you from a screen. It can begin to exist as sound.

Debbie: Is this something that you're working on at Google?

Tea: We’re really interested in all the ARCore stuff, and anchoring sounds in space, and how you orient. There are things that humans do really well. Filtering sensory perception being one, biases and filtering being another one, and understanding your location in space is another one.

You begin to realize how our understanding of reality, objective reality, is really reliant on those skills happening in our brain. So until we can begin to allow our little supercomputers in our pocket to understand where they are in space, and to understand how we experience sensory perception, not a default for humans, then these kind of experiences are still limited.

And when we do begin to play with those spaces, it's amazing, because you start to put information into the real world, in a way that you are experiencing it. I always talk about sliding doors, the opening and shutting doors, in that it's a great example of augmented reality. You walk towards the thing, the thing understands your intent, which is to walk through the door, and the mechanics of the door, open the door. Or sometimes they don't.

There's no real reason where that can't ... That sort of intent, and you think about how much information we know about you, and the patterns that you exist in on a daily basis. And for me, I like playing in cultural spaces. So theater gets massively changed when you move into a space where you've got whole other metalayers of information around the performance, or books move into an interesting space.

PROJECT: Collector Cards [2014]

Collector Cards was another pre-NFT experiment into creating (in this instance) fungible tokens of value that fed back into creator ecosystems and that could be shared between phones easily.

It was entirely inspired and powered by Google’s purchase of Bump - a brilliant bit of near-field tech that something horrible happened to once we bought it.

Sadly the whole project was deader on arrival than the teams that actually worked on Bump, although we did persevere longer than we should have (again).

PROJECT: BITESIZE [2015]

Bitesize is another of those projects that died because however intuitive, inventive, daring or simply fun they were - they weren’t what the company wanted us to make. Also (always) because the platform we used (Sheets in this case), and the platform we were using it to replace (Sites) didn’t see what we were doing as genius. They just saw it as a bug, a backdoor, a security threat. A threat basically.

Which is, in my opinion, why apps should never leave beta and should always be ready to become something else.

There is no documentation on Bitesize. It was, over an extended period, strangled at birth. It was sad. My thoughts and prayers are still with Jonny Richards and Jude Osborn.

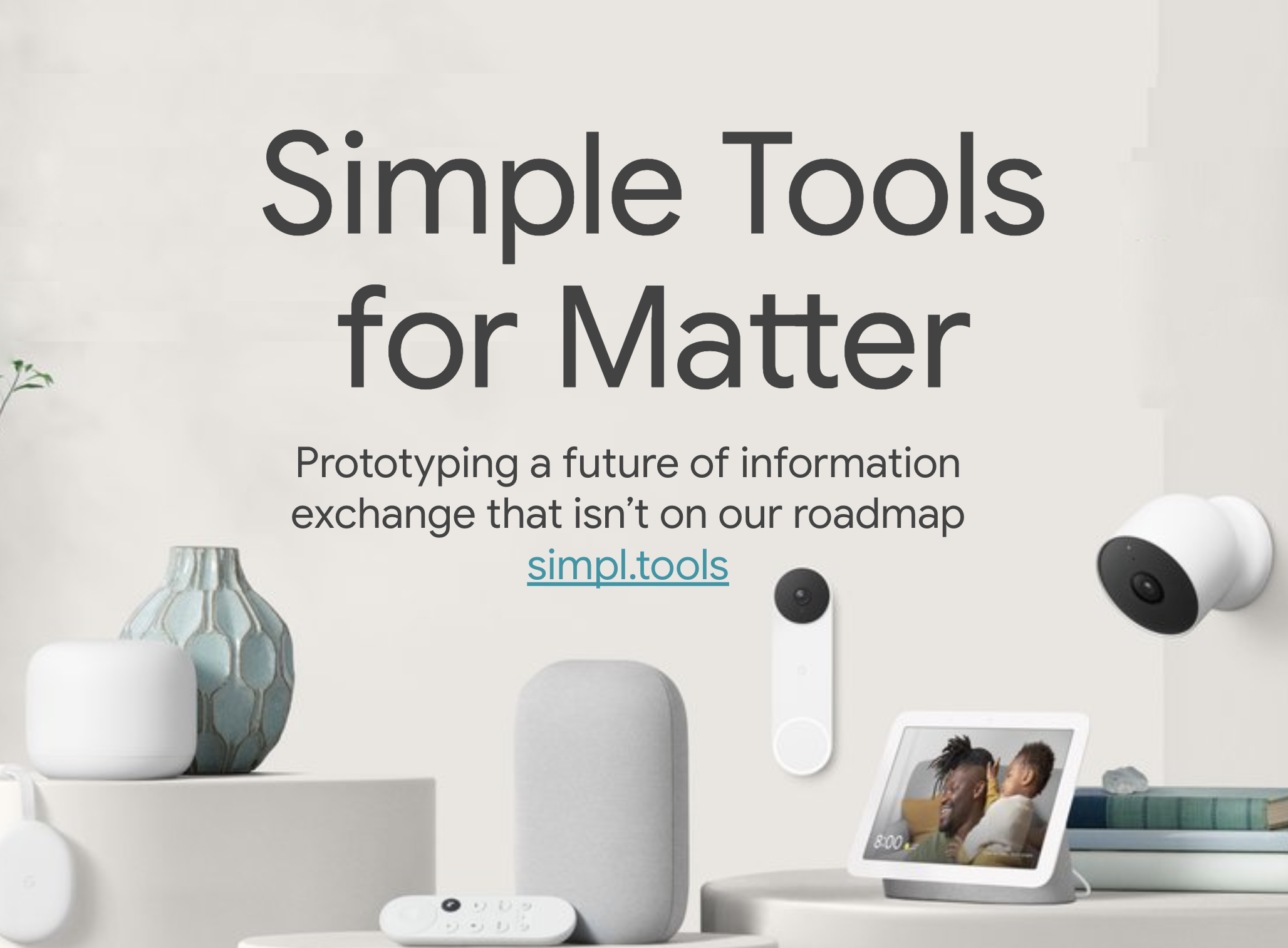

PROJECT: Simpl.tools [2022]

I have spent 10 years being a nuisance about how most digital technology would work better if it used other aspects of the human sensory umwelt than the 3” focal area we dedicate to our phones. So with the arrival of Google’s Matter in 2022, a unifying alliance between a complex myriad of competing IoT technologies, it felt like a golden opportunity to course-correct on the future of personal and ubiquitous computing.

products/google-nest/new-in-google-home-io22/

Matter is an open standard for smart home technology that lets your device work with any Matter-certified ecosystem using a single protocol.

If that doesn’t mean much to you then don’t worry - it’s just another catastrophic missed opportunity to make the world a little less screen-obsessed and reduce our diet of gamified misinformation and dopamine-fuelled consumerism. The technology is there, the possibilities are endless, the benefits are vast - but it is not sexy or cool - or, more importantly, it is not more profitable than selling phones, so it is effectively evolving like the cognitive equivalent of the climate crisis.

What do I mean?

Well, we could change the world. Make information ubiquitous, neutral, equitable. Or, more simply, we should make digital more invisible. We should want to improve the world we live in. But we don’t. Some things seem so obvious, and I often think that my inability to convey that is a profound personal failure.

BOOK: EAP - We Kiss The Screens [2018]

For our final volume in the Editions at Play collaboration with Anna & Britt @ Visual Editions .. the final “book that cannot be printed” we, well, printed it. Uniquely, depending on how you read it. For the first 250 readers only.

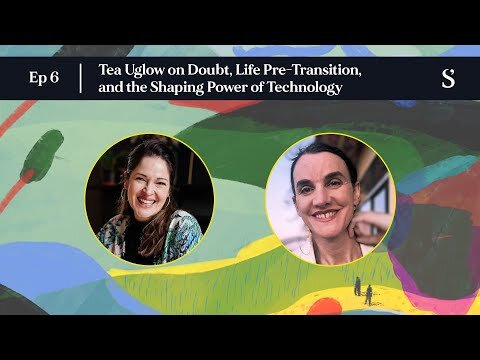

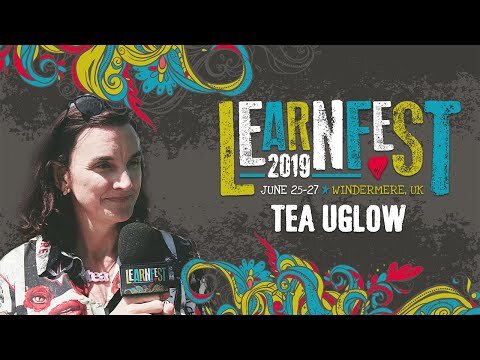

All the Talks

PROJECT: Photo Book [2020]

BOOKS: Great LGBTQ Speeches →

TALK: Pathology of Identity [2020]

TALKS: RARE [2020]

PROJECT: RESPECT [2018]

In 2016 I started to become increasingly alarmed by the prospect of ‘generative’ machine learning in a new protocol called GPT-2 and the reframing of that technology [“deep fakes” -> GenAI]. The inherent biases were already present in commercial products and came directly from the corpus used to train the models (basically Reddit & Quora). I wrote about that internally and was told it was like “being in a tank, throwing hand-grenades at other people in the tank”.

© Haylie Craig

The next reframing obscured that further - [“big data” -> LLMs]. In 2017 Tara McKenty first proposed this project which other queer and trans folk within Creative Lab like Haylie Craig and Melissa Lu turned into Project Respect. We spent much of 2018 lobbying for resources to address the issue.

I am delighted to say it was picked up by Google.org in 2019 and turned into a worldwide program (this is true) - but clearly something got fucked up along the way because nothing else happened, at least not visibly, apart from all the Google AI ethicists who kept getting fired. We know that these issues are still not remedied, and never will be, as we sail towards an aggregated, centre-conservative, monocultural post-truth future. ChatGPT is not a threat, it is not a problem… it was those things - in 2016. Today, in 2024, it is just a fuck-up. A fuck-up like leaded petrol, asbestos, or chlorofluorocarbons - another dose of globally transformative ingenuity.

PROFILE: Cannes Lions President of Glass [2023] - Campaign Brief →

The Cannes Lions International Festival of Creativity has announced the names of the Jury Presidents who will lead juries to award this year’s Lions and set the global benchmark for excellence in creativity.

Tea Uglow, founder of Dark Swan, former CD at Google will represent Australia as jury president of Glass Lions: The Lion for Change. The Glass Lion recognises work that implicitly or explicitly addresses issues of gender inequality or prejudice, through the conscious representation of gender in advertising.

PROFILE: PRCA [2022]

Since launching the PRCA’s LGBTQ+ Role Models series we’ve had the privilege of both talking to and sharing the stories of incredible leaders in our industry. It’s almost unbelievable that our 2020 workplace advocacy report—in partnership with YouGov—found that while inclusivity is improving, 29% of survey respondents were not aware of any LGBTQ+ colleagues holding senior leadership positions.

Today is International Transgender Day of Visibility, founded in 2009 to raise awareness of the discrimination towards transgender people around the world, marginalised for embracing their true identity.

As a group, we believe that acceptance is only the first step to progress. Now is the time to speak up and celebrate one another for the differences that make us unique, creative and special. We don’t all have to agree with one another all the time – or any of the time – but we all have an equal right to a voice, an opinion and to be listened to.

With that, we bring you words from one of the great creative minds in our industry at the moment: Tea Uglow, Creative Director of Google’s Creative Lab in Sydney.

What is your greatest achievement in life?

I don't have any great achievements. I am very proud of small things that matter to me, like a production of A Midsummer Night’s Dream with the Royal Shakespeare Company, or a blockchain project / digital book called The Universe Explodes with Visual Editions in 2018.

I am really proud of my book Loud & Proud - an anthology of speeches from the first 150 years of gay liberation. I didn't know when I started how much of gay rights was born and devised in Germany in the 19th century, or how they made up the words homo- and heterosexual, or that the first gay rights movement in the US didn't occur until 1924 and that was led by a German too. And there are all these amazing, amazing people so it was such a joy to dive into.

What is your greatest professional achievement?

I don't have any great professional achievements. We won a Peabody award for the books we made during the five-year window of EditionsAtPlay.com. Is that a professional thing? I feel like saying I got nominated for a special bonus for work on Chrome, or Search, or Photos. Or YouTube. I did meet the Queen after we built her YouTube channel. And I got to build the Vatican's first YouTube channel as well.

In what way, if at all, do you believe being a member of the LGBT community has affected the professional opportunities you’ve had?

Oh. I find that I can now work on Gay for Google around the world as a side-hustle. And more of my time is spent writing up articles about the challenges of being female, or trans, or bisexual, or queer, or autistic. Less so, mental health. My company’s awareness of my mental health has been the most damaging to my career - I am wrapped in cotton wool and intellectually gaslit whilst losing everything that mattered to me.

But it is hard to say so, because now, as a woman, I cannot complain. Being autistic I am easy to dismiss out of hand whenever I get particularly focused on one aspect of injustice or another. As a person, all these labels have helped lift me out of a place of total despair - but it is very interesting to see how powerfully goodwill can compound discrimination and make you feel increasingly distanced and excluded.

I think the British government have had the biggest impact on my life. I am a child of Thatcher and a casualty of Section 28. From 1988 it became illegal in the UK to teach awareness of LGBTQ issues in schools, and as a result the culture of fear meant that no aspect of my puberty or childhood ever mentioned gay people or bisexuality, or trans people. Section 28 should be seen like we look at the unthinkable 1960’s psychology experiments on children. Every child in the country took part in that experiment - there was no opt out.

I feel that any adults in that position sounded so scared that children, like me, knew far more about Santa than they did about queers. Even years later, I still didn’t really know how little I knew. Instead of being educated, I was home-schooled by Ace Ventura, growing up ignorant of myself, and utterly illiterate. Section 28 lasted 25 years.

If it had been an experiment at least we would have data. The deep psychological scars so many of my age carry would have borne fruit, however bitter. But it wasn’t, so no one gets to learn a thing. And you cannot look at the singularly English version of transphobia without thinking that maybe, just maybe, a complete absence of social and sexual education may have left a mark there too. This is a battle I will fight all my life - not the one with the TERFS - the one with my own deep-rooted disgust and self-loathing.

What positive change have you seen or personally managed to effect for the LGBT community over the course of your career?

I actually feel like the gay flag emoji made a huge difference and it was the starting gun on my desire to get a transgender pride flag emoji. It took over four years and a small but amazing group of people. Seeing Monica Helms' flag in the beta releases last year was a day that I cried about.

More prosaically, I am proud of getting Google to extend their trans healthcare worldwide. I am quite old, so my career has a lot of space to SEE things. When I think most seriously about the question, I think of the repeal of Section 28 in 2004 and, less stand-out but with a huge sweeping impact, of medicine - the life, personal and professional success of a very dear friend called John. In 2000, when he was diagnosed HIV positive, we all assumed he would be dead within five years or so. We mourned. That he is still here is a testament to progress, to science, to evidence-based treatment.

What do you hope the near future holds for progress in inclusivity in the communications industry and how can individuals make a difference?

I'm about to begin a project to push for the erasure of gendered pronouns (just he/she. his and hers). They are unnecessary and dangerous words. It might be another one of these autistic obsessions, but I cannot understand why any woman demands that 'she' be labelled as a demographic that is distinct from the dominant and which provides a level of discrimination that is so ingrained, so visible, and evidenced in every data set.

It is a violent inequity, and it is enforced primarily by defining every human into one of two camps. In almost no situation does that distinction make a difference. We have learnt to eradicate, or at least acknowledge the implications of describing a person's skin pigmentation, why are we still adamantly defending the necessity of describing their gender? I think if you dealt with the far simpler problem of a new word for a plural 'they' (like, theys) then we could limit the structural discrimination that we know exists but can do so little to resolve.

PROFILE: Design Matters [2020]

Design Matters: Tea Uglow

It’s hard to describe exactly what Tea Uglow does. But know this: She has your dream job. Within Google, as has been said before, she is, essentially, paid to play.

The gig didn’t come easily. Uglow started as a Fine Art student, came across design and navigated the tides of the Dotcom boom and Dotcom bust, and then grabbed the laptop from her severance package and taught herself HTML. She bounced around a few design jobs, and then happened upon a one-month contract position to make Powerpoints for the sales team at Google.

… And then she founded Google’s Creative Lab in Europe.

How?! In this episode of Design Matters, Debbie Millman explores just that—and, of course, digs into what Uglow does today as creative director of Google’s Creative Lab in Sydney.

Uglow’s work may not be the easiest thing to nail down in a nutshell, and it’s best seen in action. So here we present a tapestry of Tea, from her personal writings to a medley of her striking projects that reveal the key to her swift rise and all the rest of it: her raw brilliance.

Midsummer Night’s Dreaming “The Royal Shakespeare Company put on a unique, one-off performance of ‘Midsummer Night’s Dream’ in collaboration with Google’s Creative Lab. It took place online, and offline—at the same time. It was the culmination of an 18 month project looking at new forms of theater with digital at the core.”

XY-Fi “XY-Fi allows you to mouse-over the physical world, with your phone.”

Editions at Play “Editions At Play is the Peabody Futures–award-winning initiative by Visual Editions and Google’s Creative Lab to explore what a digital book might be: one which makes use of the dynamic properties of the web.”

Hangouts in History “Google’s Creative Lab teamed up with Grumpy Sailor to help a class of year 8 students from Bowral ‘video conference’ with 1348, in what became the first of five ‘Hangouts in History.’”

The Oracles “The Oracles is a cross-platform experience, developed for primary school children in Haringey. Digital and physical environments are blended, alternating between gameplay and visits to Fallow Cross, where enchanted objects know where you are so that your moves trigger the story.”

Story Spheres “Story Spheres is a way to add stories to panoramic photographs. It’s a simple concept that combines the storytelling tools of words and pictures with a little digital magic.”

Bar.Foo “Google has a secret interview process …”

Debbie talks with Tea Uglow about experimental digital projects that are pushing the boundaries of tech and art. “This is very much the principle with all my projects, just draw a line in the sand and put a flag there. Then at least someone might come over and look at the line and ask ‘What’s the flag for?’”

PROJECT: SIS - Agatha Gothe Snape [2019]

TALKS: Why matters more than what [2020]

Tea Uglow shares her thoughts on the direction that technology is taking on a global scale and how her role at Google’s Creative Labs has more to do with studying human nature than one might think. Before taking to the Design Indaba Conference stage, Uglow chatted about misconceptions folks have about the way technology is evolving and what propels her own creative thinking.

PROJECT: ARIA [2019]

ARIA is one of those projects that are monumental, career-works for everyone working on them or around them — but get lost when exposed to the stupefying dazedness of everyday digital flotsam.

For a real insight into why this project mattered I recommend Sean Kelly’s blog - the actual genius behind the scenes.

Opera Queensland Partners with Google Creative Lab to Bring the Opera Home with AR and AI

Australia’s Opera Queensland worked with Google Creative Lab on a demo project that uses augmented reality and artificial intelligence to bring the stage experience of the opera to the four walls of your home.

With the prototype AR app, opera fans no longer have to line up or even travel to the theater to enjoy an opera production. The project will allow opera enthusiasts to turn their homes into a theater stage and experience the opera via augmented reality.

Opera enthusiasts will now enjoy performances from the comfort of their homes

The experimental AR app, dubbed Project AR-ia, was created with a version of The Magic Flute performed by the Australian opera singers Emma Pearson, Brenton Spiteri and Wade Kernot, accompanied by Brisbane’s chamber orchestra, Camerata.

Opera Queensland hopes to use the technology to demystify opera and make it more accessible, thereby bringing it to newer audiences. The Project AR-ia experimental augmented reality app shows how the art of opera can adapt with the times and be enjoyed by audiences anywhere and at any time.

The app engages audiences in various ways and revolutionizes how we experience the music form. While attending the opera has entailed dressing up and travelling with loved ones to the theater, future opera enthusiasts will be able to simply watch the performances from the comfort of their homes with AR avatars performing for them.

Opera Queensland approached Google in early 2018 in a bid to explore the creation of diverse opera experiences through new technology. The opera wanted to give all enthusiasts a front-row seat in the opera from the comfort of their homes. To realize this, the performances of the opera singers have been completely digitized in 3D as a first step. The technology leverages Google’s AI-based volumetric recording system known as The Relightables which was unveiled recently.

The images of the opera actors were captured using 90 high-resolution cameras and numerous depth sensors from multiple perspectives. An algorithm subsequently created an animated 3D model of the person captured from the many individual images.

The digital person or avatar created is then projected into the user’s real environment through Google’s smartphone AR software. This is how the user is able to get a private screening of Mozart’s Magic Flute in their living rooms.

The technical challenge faced by the developer was that of the high data outlay. According to the developer, just 20 seconds of the spatial film material consumed more than a terabyte of data which had to be computed and compressed even further.

Even after the data compression, one frame of the volumetric opera still required about five megabytes. Three opera singers animated at 30 frames per second therefore required about 450 megabytes of data per second, which is equivalent to about 4.5 gigabytes for every ten seconds. This roughly corresponds to the data volume of a complete high-resolution 2D film.
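The figures quoted above can be checked with a little arithmetic. The short Python sketch below assumes that the five-megabyte figure describes one frame of one singer, which is the reading under which the article's numbers agree with each other; the constant and function names are made up for this example, and decimal units (1 GB = 1000 MB) are assumed.

```python
# Figures taken from the article; the "per frame, per singer" reading
# of the 5 MB figure is an assumption of this sketch.
MB_PER_FRAME_PER_SINGER = 5
FRAMES_PER_SECOND = 30
SINGERS = 3


def throughput_mb_per_second(mb_per_frame, fps, performers):
    """Data rate in megabytes per second for all performers together."""
    return mb_per_frame * fps * performers


rate_mb_s = throughput_mb_per_second(
    MB_PER_FRAME_PER_SINGER, FRAMES_PER_SECOND, SINGERS
)
ten_seconds_gb = rate_mb_s * 10 / 1000  # decimal gigabytes

print(rate_mb_s)       # 450
print(ten_seconds_gb)  # 4.5
```

At that rate a single minute of performance comes to roughly 27 gigabytes, which gives a sense of why streaming the prototype to phones was impractical.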

This massive data throughput required for the streaming of the opera must have played a role in the developers not releasing the prototype augmented reality opera. The project itself began as a storytelling experiment for a new kind of experience exploring how to make art accessible to a greater number of people. The work on the AR formats is set to continue as the demo app is at the forefront of creative technology and will require significant investment and development over a duration of time that will help propel it beyond the prototype stage.

PROFILE: Sydney Morning Herald [2020]

Lunch with Google creative director Tea Uglow

Before our lunch at the Sydney Opera House, where Google Creative Director Tea Uglow will next month be one of the headline speakers at the All About Women festival, I receive a handy guide titled: “What to expect when you’re expecting Tea.”

It is for people who are new to working with the head of Google’s Creative Labs in Sydney: a transgender woman who identifies as queer.

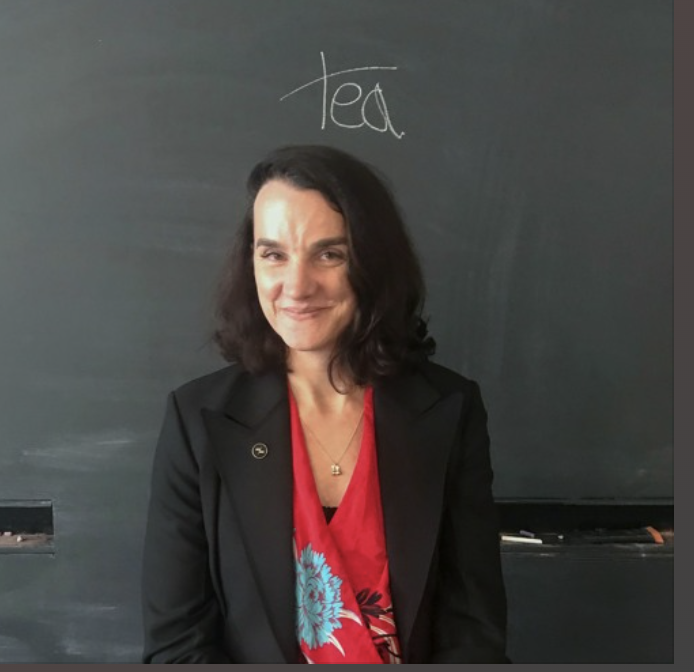

Tea Uglow, Creative director of Google. CREDIT: LOUISE KENNERLEY

“I am happy for you to know (and to talk to us) about this – rather than guess because it’s helpful (for all of us),” the document explains and goes on to list some specifics.

Pronunciations of names: Tea (like the drink); surname U-glow like You-Glow as in Unicorn. Preferred pronouns: she / they . Links to the times she has written and spoken publicly about the transition from a man called Tom to a woman called Tea which started in 2016.

“It was certainly a complicated few years … After a lifetime of thinking I was unusual (doesn’t everyone?) or at least an unusual boy (as in teetotal, chocolate-loving, vegetarian, yogi feminist) it turns out I was completely normal just not 100 per cent boy,” the document explains with refreshing candour and a self-deprecation that I will come to understand as trademark.

The cheat sheet even covers what her children – two boys aged eight and five – call her: Tea, rather than mum or dad. Why does parenting need to be gendered, she asks in the same manner she will encourage her audience at All About Women, which takes place on International Women’s Day, to consider how technology can be harnessed to change gender stereotypes.

It is such a concise compendium of questions and answers, I wonder if we will have anything to talk about when I arrive at the Portside Restaurant.

When we meet we discuss the fact that our companies used to share the same office space in Pyrmont. As Google expanded, taking up more office floors, our company office space shrank, a metaphor reflecting the changing fortunes of our industries, she suggests. The company she joined in 2006, pretty much as a start-up, is notorious for providing nap rooms and free food cooked by chefs, but she tells me if she’s in the office she will usually just have a cheese sandwich.

Uglow, now 45, started working at Google in London’s Soho office, when it was half a standard office floor and a far cry from the tech giant it is today employing about 200,000 worldwide.

“When I first joined we got free lunch but it was just a baked potato. It was a group of people who wanted to change the world via tech and it has changed the world of the internet, creating search engines and building browsers ... When I look back I am very proud of all we have done,” she says.

We order sparkling mineral water and sourdough bread with Pepe Saya butter to start and decide to share a salad and a main: Heirloom tomato, Burrata Stracciatella, olives, confit onion dressing with oregano and Truffled orecchiette pasta, cauliflower polonaise with brown butter.

Truffled orecchiette pasta, cauliflower polonaise with brown butter at the Portside restaurant.CREDIT:LOUISE KENNERLEY

She sees her job as creative director, sometimes as head of a team of 100 people, as more like a gardener, who nurtures “geeks like me” and helps them grow. She’s recognised as a gifted communicator (a TEDx talk she gave has been viewed 1.7 million times) and is often asked to address large crowds. She first spoke publicly about her transgender journey in July 2018 in a talk called “how to lead when you don’t know what you’re doing”. In it she lined up all her Google security passes and put them in a PowerPoint slide to illustrate her transition from Tom to Tea in pictures. She included it to show how true creativity, as with the scientific inquiry she oversees at Google, means not knowing where you are going, and not being afraid to take risks. Throughout life, Uglow has always taken the “least boring option.”

Born in 1975 in Kent, southeast England, to a father who was an academic and a mother in publishing, Uglow is the eldest child with two younger brothers, a sister and a half brother. From the age of six she taught herself computer coding from magazines.

Growing up she played rugby and studied ballet with her sister, went to an all boys school, where she was head boy, then headed to Oxford University to study fine arts (also coaching the women’s rugby team while there). Here they were told only two of them in the class would make it as artists, so she decided to head in a different career direction: “one where you could get a job.”

After a string of different dotcom jobs, she took a contract for a month making Powerpoint slides at Google, where her off-the-charts intellect found a home with “fellow geeks”. She has worked at the internet behemoth ever since, at the intersection of art and technology, moving to Sydney in 2012 with her former Australian-born partner and mother of her children.

The impact of her transition on her family has been immense and complicated. She wrote the tips for “dealing with Tea”, to make things a bit simpler for everyone.

“It’s a bit like coding, it is complex but it is also exciting. Shouldn’t that be what being alive is?” she says.

The only people her trans status really impacts is her and her “long suffering family”, who it is clear she is keenest to protect. Most people aren’t bothered when she speaks openly, she said.

Finding her voice as a transgender woman has helped her emerge as a leader in that community, and she’s been stunned by the tweets and letters she’s received in support.

“If that’s what leadership is I’ll take it,” she says.

When lunch arrives on this hot summer day we eat slowly, and she talks about her sons and the hope that they grow up in a world where they are not defined by their gender. She’s written a book already on doubt, but another, called Loud and Proud, will be published in May, which brings together 50 speeches from the past 150 years of LGBTQI activism. It is dedicated to the affirming parents of transgender children.

Heirloom tomato, Burrata Stracciatella, olives, confit onion dressing with oregano at the Portside Restaurant.CREDIT:LOUISE KENNERLEY

“I am uncomfortable with putting people in boxes according to their biology at birth. It is quite nice to know we live in a world that acknowledges it doesn’t have to be that way,” she tells me.

“I’ve done the white male privileged thing – boy scouts, rugby, drinking with the lads – and people asked why would you give it up? I tell them it’s not a choice. I drew the short hormonal straw in the womb, it’s not like you choose to become a chess prodigy or get cancer. I don’t want to be anything except happy. And I am happier. Reeling a bit, but happier.

“My idea of hell was going to a barbecue when the mums were at one end of the garden on blankets playing with the kids and I was down at the other end with the men drinking beer (I don’t drink alcohol), cooking meat (I’m a vegetarian) just thinking I don’t want to be here, I want to be with the women. I was fundamentally not interested in the conversations the men had. I’ve always preferred what women talk about and most of my friends are female – I just prefer the company of women.

“I have had some mental health issues but they are about the fact I spent 30 years pretending the things I felt were twisted and broken. When I stopped pretending, denying and hiding part of myself that felt broken it was great.

“In my case I need to acknowledge Tom exists and that it is important to not keep him in the cellar the way he kept me in the cellar. But I am not ashamed of who I am anymore … Transitioning was like a grief. It’s surprising how potent that grief is,” she says.

She believes it is only convention and language that defines and creates the expectations we have of men and women, not individuals. As for gender in the tech world, Uglow’s unique perspective will be what she will talk about at the Opera House next month.

“I’ve been on both sides. I’ve seen women not behave well to other women in the workplace, and men playing the ‘guy card’ leaning on their physicality like you can in rugby. My suggestion to women would be don’t ‘lean in’ and play like the boys – we need to drop sexist views and language on both sides,” she says.

She sees gender as a spectrum (“I’m more fem on that spectrum”) like autism (she also is autistic.)

Google employee Tea Uglow speaking as a trans woman.CREDIT:LOUIE DOUVIS

“Autistic people often change the world because they are used to not belonging. We are very useful in the fight to change the world because we are so single minded,” she says.

Uglow has been working tirelessly for four years on developing a trans flag emoji. (We talk the day it is approved and she is elated.) “It’s a symbol to society we are here and we’re not going away,” she tells me.

After two and a half hours of talking so intensely our food goes cold before we order coffee, we are the only ones left at the restaurant when I ask for the bill. The conversation takes a slightly shallower twist toward fashion, Tea telling me what a delight it is to go shopping for women’s clothing compared to the staid options and limited colours for men. She’s wearing a silk Bianca Spender blouse, a necklace with her name Tea and a love heart, a Sunray pleated skirt, completed with billowing white hat and heeled sandals. She never understood why women used to wear heels given how uncomfortable they can be, but now she’s a convert and will carry Band aids in her handbags to deal with any discomfort. Our waiter bids us farewell with a “thanks ladies.”

“I’m always so thrilled and surprised when I hear that,” Tea tells me, leaving with a little extra bounce in her high-heeled steps.

Tea Uglow will appear at All About Women on March 8.

PROJECT: PRECURSORS to a DIGITAL MUSE [2019]

Remember when we all got excited about GPT-2?

The Experiments: https://experiments.withgoogle.com/collection/aiwriting

No, we never made them publicly accessible. I felt [and continued to feel up to my departure in 2023] that generative AI is a net negative. That’s why we do experiments. Not that anyone listens to that part.

![TALK: Pathology of Identity [2020]](https://images.squarespace-cdn.com/content/v1/53005993e4b0673fce2744be/1711358456076-EBFDJKCWLIKQB227DLMT/image-asset.jpeg)